TL;DR

In this blog post, I talk about how I spent 3 hours setting up a chatbot that can recognize batteries using the Microsoft Custom Vision API.

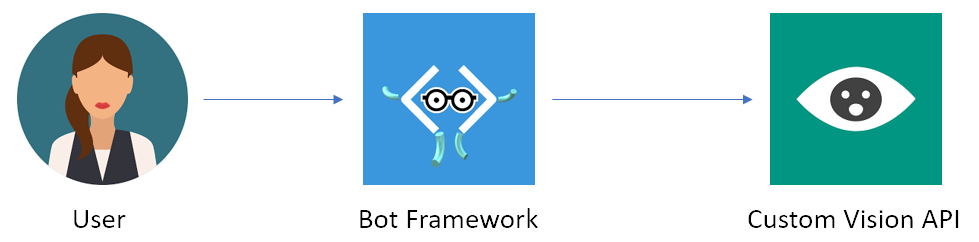

Recently, a colleague asked me to create a small demo for a talk he was doing on Azure and the Bot Framework. The talk was for a conference that dealt with environmental issues, and the demo was only needed for a couple of minutes. I decided to write a bot that you could upload images to, and then have the bot tap into the Custom Vision API to identify what’s in the image.

Custom Vision API

The Custom Vision API lets you train a machine learning model on a set of tagged images, so that it can later identify other images containing the same subject. I know, it’s fuzzy! Let’s see if it becomes clearer during this post.

I decided to dive into this one first, since the bot would need something to connect to. Setting up the Custom Vision API (from here on: CVA) was straightforward:

- Navigate to the Azure Portal and set up a new resource group for the bot and CVA

- Within the resource group, add a Custom Vision Service

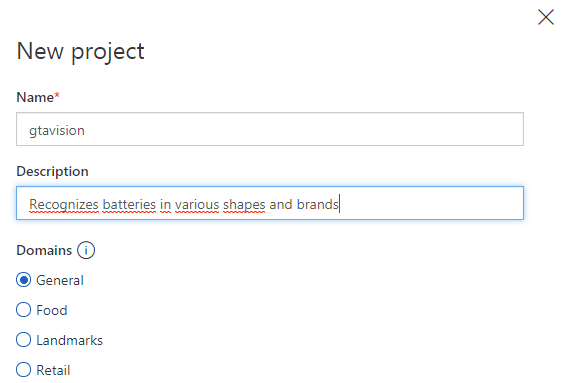

Once you’ve created the service, click Quick start and navigate to the Custom Vision Portal. Here, I selected my resource group and clicked New Project.

Once the project is created, you upload a bunch of images to train the model with. The project needs at least 2 tags, and each tag needs at least 5 images before you can train. I uploaded 12 images and tagged them as follows:

- All images are tagged Batteries

- Images with only one battery are also tagged with Battery

- Images of only Duracell batteries are tagged with Duracell

I then hit the Train button in the top right to teach CVA to recognize those batteries. All that was left was to click the Prediction URL to fetch the details of the API for use in my bot.

You need to copy the Image File URL as well as the Prediction-Key values to use in the Bot project.

Bot Framework

The Bot Framework is a fantastic tool to provide conversational abilities to a whole range of channels such as web, Skype, Facebook and so on. For this demo, I only wanted to focus on file upload so that I could quickly tap into the CVA for classification.

Introductory message upon conversation update

I wanted the bot to just quickly deliver an instruction upon conversation start. To avoid duplicate messages, you’ll want to verify that the user joining the conversation is not the bot itself, thus:

    else if (message.Type == ActivityTypes.ConversationUpdate)
    {
        // Greet every member who joins, except the bot itself
        foreach (var addedMember in message.MembersAdded)
        {
            if (addedMember.Id != message.Recipient.Id)
            {
                var reply = message.CreateReply(
                    $"Welcome to GTA, {addedMember.Name}! Try sending me a JPEG.." +
                    $" I LOVE processing JPEG images, ESPECIALLY if they have batteries in them!!!");
                var client = new ConnectorClient(new Uri(message.ServiceUrl), new MicrosoftAppCredentials());
                await client.Conversations.ReplyToActivityAsync(reply);
            }
        }
    }

Receiving an uploaded file

Handling uploaded files is a breeze in the Bot Framework. You simply look for attachments on the incoming message, and then download the first one as a byte array:

    private async Task<byte[]> GetImageDataAsync(Activity activity)
    {
        var url = activity.Attachments[0].ContentUrl;
        var httpClient = new HttpClient();
        return await httpClient.GetByteArrayAsync(url);
    }

It really doesn’t get much easier than that!

Obtaining a prediction

The last bit of “complicated” (haha, really not!) code that was needed was to create a class to handle the CVA. The main method to look at is the GetPrediction() method:

    public async Task<VisionResponse> GetPrediction(byte[] imageData)
    {
        var content = new ByteArrayContent(imageData);
        content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

        var response = await _httpClient.PostAsync(_apiUrl, content);
        if (response.IsSuccessStatusCode)
        {
            var text = await response.Content.ReadAsStringAsync();
            return JsonConvert.DeserializeObject<VisionResponse>(text);
        }

        return null;
    }

Note: The _apiUrl is a class member that was initialized in the class constructor.
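The constructor itself isn't shown in this post, so here is a minimal sketch of what it might look like. It assumes the class keeps a single `HttpClient` and sends the key in the `Prediction-Key` request header. The field names follow the snippet above; the argument checks and everything else are my assumptions, not the demo's actual code.

```csharp
using System;
using System.Net.Http;

public class GtaVisionApi
{
    private readonly HttpClient _httpClient;
    private readonly string _apiUrl;

    // Sketch only: stores the prediction URL and attaches the key
    // to every request made through this client.
    public GtaVisionApi(string apiUrl, string predictionKey)
    {
        if (string.IsNullOrWhiteSpace(apiUrl))
            throw new ArgumentException("A prediction URL is required.", nameof(apiUrl));
        if (string.IsNullOrWhiteSpace(predictionKey))
            throw new ArgumentException("A prediction key is required.", nameof(predictionKey));

        _apiUrl = apiUrl;
        _httpClient = new HttpClient();

        // The key copied from the Custom Vision portal travels as a header.
        _httpClient.DefaultRequestHeaders.Add("Prediction-Key", predictionKey);
    }
}
```

Setting the header once on the client keeps `GetPrediction()` free of any authentication details.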

All done

That was all the stitching required to get this bot to work. Now I had an easy way to extract the image bytes from an uploaded attachment to the bot, and I could send those bytes over to CVA for content prediction:

    private async Task MessageReceivedAsync(IDialogContext context, IAwaitable<object> result)
    {
        var activity = await result as Activity;

        // Check if the user actually uploaded a JPEG file
        // (Attachments may be null for plain text messages)
        if (activity?.Attachments != null
            && activity.Attachments.Any()
            && activity.Attachments[0].ContentType == "image/jpeg")
        {
            var contentData = await GetImageDataAsync(activity);

            var visionApiUrl = CloudConfigurationManager.GetSetting("VisionApiUrl");
            var predictionKey = CloudConfigurationManager.GetSetting("VisionPredictionKey");
            var visionApi = new GtaVisionApi(visionApiUrl, predictionKey);
            var visionResult = await visionApi.GetPrediction(contentData);

            if (visionResult == null)
            {
                // GetPrediction returns null when the API call fails
                await context.PostAsync("Sorry, I couldn't analyze that image. Please try again.");
            }
            else
            {
                // I only wanted to deal with scores above 80%
                var scores = visionResult.Predictions.Where(p => p.Probability > 0.8);

                // Provide a fitting response to the contents in the image
                await ReactToScores(context, activity, scores);
            }
        }
        else
        {
            await context.PostAsync("I've no idea how to deal with that! Try sending me an image?");
        }

        context.Wait(MessageReceivedAsync);
    }

That’s the final code in my RootDialog class. If you’re interested in trying this out, I’ve provided a link to the code on GitHub so you can clone it and try it for yourself. The bot basically warns you about how to safely dispose of batteries. When it predicts a Duracell battery, however, it shows the Duracell Bunny (since we were all fed the idea that those batteries basically live forever, hehe).
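The post doesn't show how `ReactToScores` picks its response. As a rough sketch, the decision could be isolated in a small helper like the one below. The `Prediction` property names, the `BatteryReplies` class and the reply texts are all hypothetical; only the tag names come from the training step earlier in this post.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of a single prediction; property names are assumptions.
public class Prediction
{
    public string Tag { get; set; }
    public double Probability { get; set; }
}

public static class BatteryReplies
{
    // Placeholder texts, not the wording used in the actual demo.
    public const string NoBatteries = "I can't see any batteries in that image.";
    public const string DisposalWarning = "Batteries! Please hand them in for recycling instead of binning them.";
    public const string DuracellBunny = "Duracell! Time to show the bunny.";

    // Expects predictions that already passed the probability threshold.
    public static string ChooseReply(IEnumerable<Prediction> scores)
    {
        var list = scores.ToList();

        // The most specific tag wins: Duracell before generic batteries.
        if (HasTag(list, "Duracell"))
            return DuracellBunny;
        if (HasTag(list, "Batteries") || HasTag(list, "Battery"))
            return DisposalWarning;
        return NoBatteries;
    }

    // Case-insensitive tag lookup that tolerates missing tag names.
    private static bool HasTag(IEnumerable<Prediction> scores, string tag)
    {
        return scores.Any(p => string.Equals(p.Tag, tag, StringComparison.OrdinalIgnoreCase));
    }
}
```

Inside the dialog, the result could then be sent with `await context.PostAsync(BatteryReplies.ChooseReply(scores));`. Keeping the choice in a pure function makes it easy to test without a running bot.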

Conclusion

I spent a total of 3 hours playing around with this stuff: completing the CVA project, writing the bot code and publishing it to Azure. It amazes me how fast the CVA learns what’s in the images! Using machine learning / artificial intelligence to classify photos opens up a whole world of possibilities, and bringing it together with the Bot Framework is a good recipe for getting a lot done in no time at all!
