How I do error handling in my REST APIs

As I build more and more REST APIs, I find I need to bubble up an object that translates to either an HTTP 200 OK result or an RFC 7807 …
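To make the idea concrete, here is a minimal sketch in Python of a result object that is bubbled up from the service layer and translated at the edge into either a 200 OK or an RFC 7807 problem response. The names `Result`, `ProblemDetails`, `find_user` and `to_http` are hypothetical and chosen for illustration only; this is not the code from the post.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Optional


@dataclass
class ProblemDetails:
    """A subset of the RFC 7807 members (hypothetical helper type)."""
    type: str = "about:blank"
    title: str = ""
    status: int = 500
    detail: str = ""


@dataclass
class Result:
    """Carries either a value or a problem up the call stack (assumed shape)."""
    value: Any = None
    problem: Optional[ProblemDetails] = None

    @property
    def ok(self) -> bool:
        return self.problem is None


def find_user(user_id: int) -> Result:
    # Hypothetical service method with an in-memory "database".
    users = {1: {"id": 1, "name": "Ada"}}
    if user_id not in users:
        return Result(problem=ProblemDetails(
            title="User not found",
            status=404,
            detail=f"No user with id {user_id}.",
        ))
    return Result(value=users[user_id])


def to_http(result: Result):
    """Translate a Result into a (status, headers, body) tuple."""
    if result.ok:
        return 200, {"Content-Type": "application/json"}, json.dumps(result.value)
    # RFC 7807 defines the application/problem+json media type for errors.
    return (
        result.problem.status,
        {"Content-Type": "application/problem+json"},
        json.dumps(asdict(result.problem)),
    )
```

With this shape, the service code never touches HTTP directly; only `to_http` decides how a success or a failure is put on the wire.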

My shared experiences and advice in software

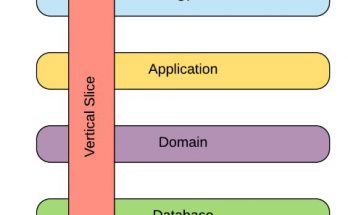

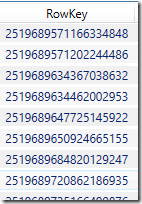

This is a reminder to myself more than anything else. It is not often that I get to greenfield a new project. Most of the time, the solution already exists, …

At a recent partner hackfest, the need arose to store a username/password combination in Azure Key Vault. Setting up a Key Vault instance was dead easy: Sign in to the Azure portal …

TL;DR: In this blog post, I talk about how I spent 3 hours setting up a chat bot that can recognize batteries using the Microsoft Custom Vision API. Recently, a …

What is Application Insights Anyway? Application Insights is an extensible Application Performance Management (APM) service for web developers on multiple platforms. Use it to monitor your live web application. It …

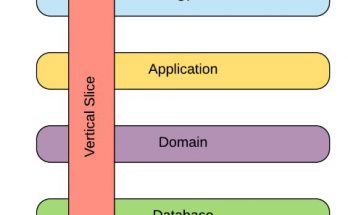

In this post, I explain how I built the program “SubWordsFinder”, which takes a string, finds all real English words that contain that string, then removes the string from …
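As a rough illustration of the part described so far, here is a small Python sketch. It only covers finding the words that contain the string and removing the string from each of them. The function name `find_sub_words` and the tiny word list are assumptions made for this example; the actual program and its dictionary are not shown here.

```python
def find_sub_words(fragment, words):
    """Return (word, remainder) pairs for every word containing `fragment`.

    `words` stands in for a real English dictionary (an assumption here).
    The remainder is the word with the first occurrence of `fragment` removed.
    """
    fragment = fragment.lower()
    matches = []
    for word in words:
        lowered = word.lower()
        if fragment in lowered:
            remainder = lowered.replace(fragment, "", 1)
            matches.append((lowered, remainder))
    return matches


# Hypothetical word list for demonstration purposes only.
sample_words = ["party", "cart", "dog"]
pairs = find_sub_words("art", sample_words)
```

Here `pairs` pairs each matching word with what is left once "art" is taken out of it; "dog" is skipped because it does not contain the string.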

Apologies for the long absence of information regarding my plant watering project. Today I hung up a lamp above my plant in order to provide it with enough light as …

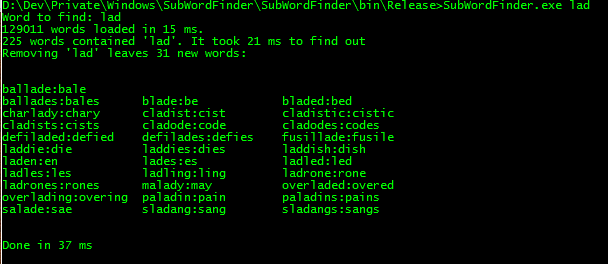

Sometimes, all you want is to be able to quickly get to the last value of a sensor, or the freshest product in your Azure Table without having to do …

Growing concerns about the direction of XAML-based applications

Microsoft, what the hell do you think you are doing by diverging WPF, Silverlight and Silverlight for WP7?? None of those 3 …

A quick introduction to getting up and running with Microsoft Azure: a hands-on guide to creating a Silverlight application that uses a REST API …
